Abstract

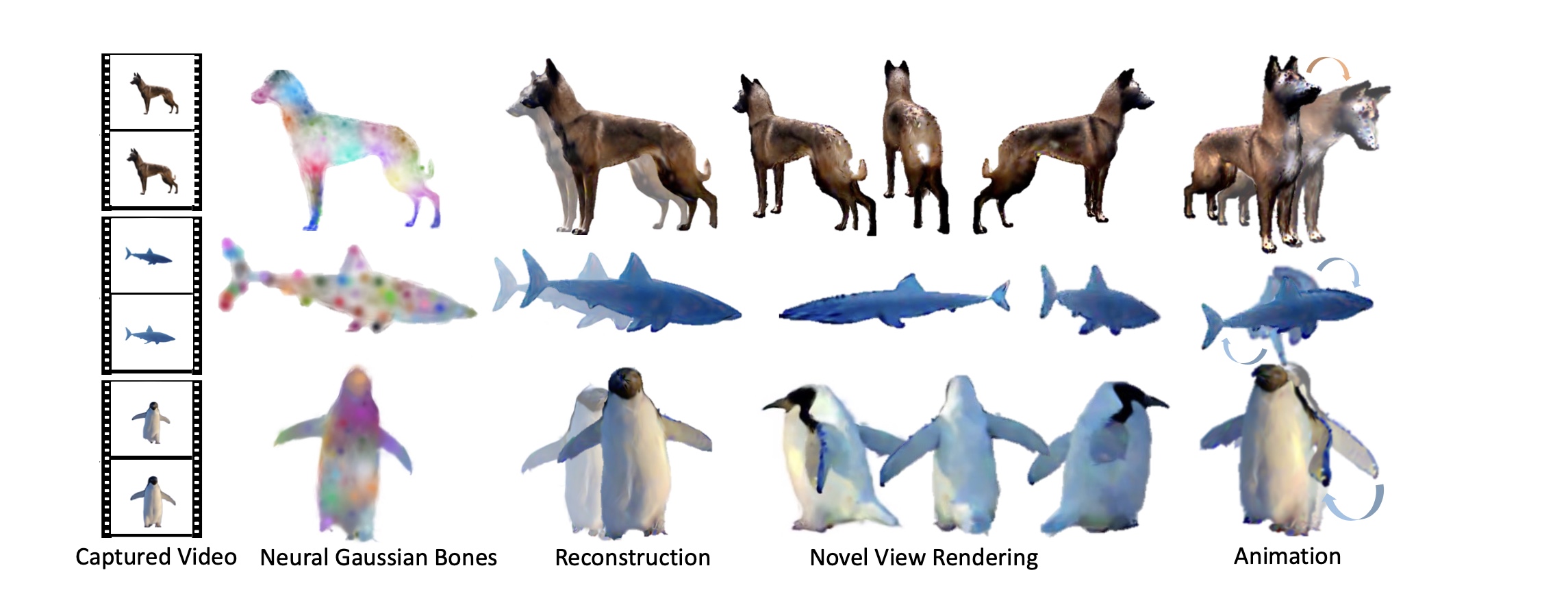

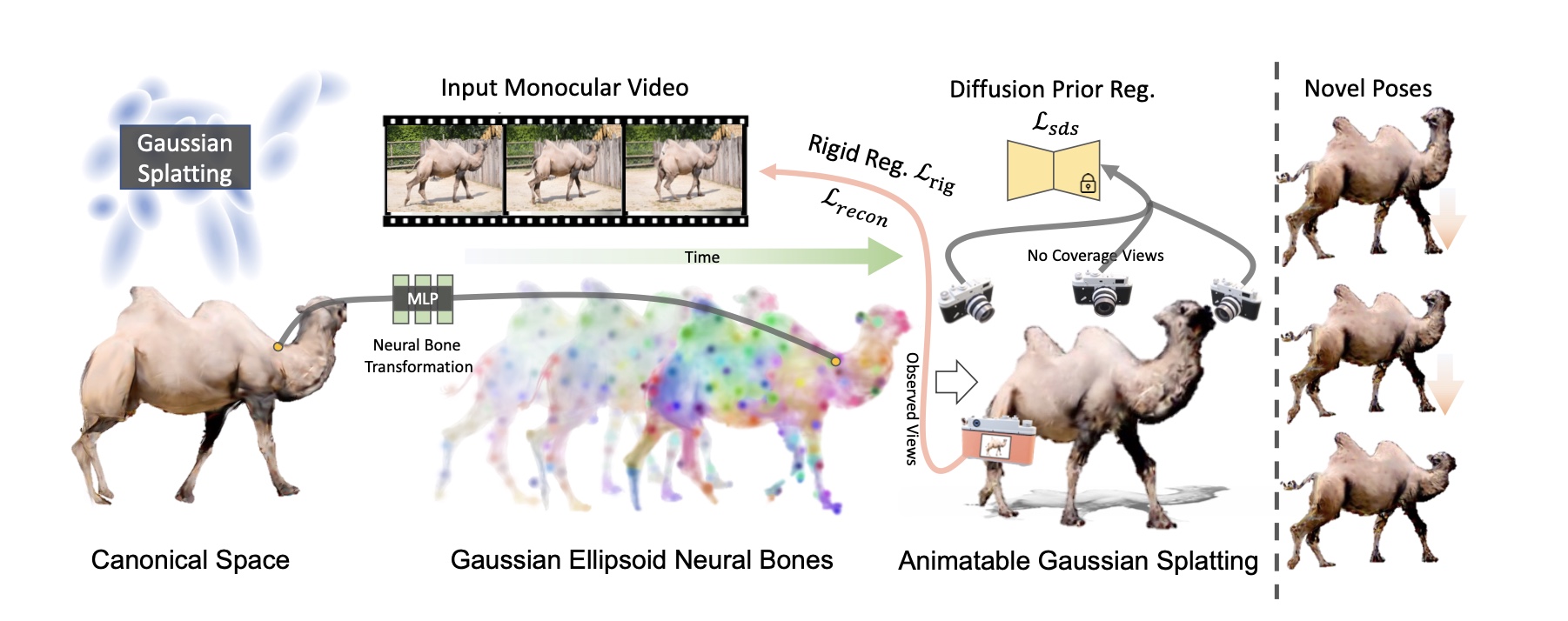

Animatable 3D reconstruction has significant applications across various fields but has primarily relied on manual creation by artists. Recently, some studies have successfully constructed animatable 3D models from monocular videos. However, these approaches require sufficient view coverage of the object in the input video and typically incur significant time and computational costs for training and rendering. These limitations restrict their practical applications. In this work, we propose a method to build animatable 3D Gaussian Splatting from a monocular video with diffusion priors. The 3D Gaussian representation significantly accelerates training and rendering, and the diffusion priors allow the method to learn 3D models from limited viewpoints. We also present a rigid regularization to enhance the utilization of the priors. We perform an extensive evaluation across various real-world videos, demonstrating superior performance compared to current state-of-the-art methods.
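The abstract does not specify the form of the rigid regularization. Purely as an illustration of what a rigidity-style regularizer on Gaussians can look like, the sketch below implements a generic isometry penalty of the kind used in some dynamic Gaussian Splatting work: for each Gaussian center it finds the k nearest neighbours in the canonical configuration and penalizes changes in those pairwise distances after deformation. This is an assumption for illustration, not the BAGS formulation; the function names `knn_indices` and `isometry_penalty` are hypothetical, and the brute-force neighbour search is only suitable for tiny inputs.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def knn_indices(points: Sequence[Point], k: int) -> List[List[int]]:
    """Brute-force k nearest neighbours of each point, excluding the point itself."""
    neighbours = []
    for i, p in enumerate(points):
        others = (j for j in range(len(points)) if j != i)
        order = sorted(others, key=lambda j: math.dist(p, points[j]))
        neighbours.append(order[:k])
    return neighbours


def isometry_penalty(canonical: Sequence[Point],
                     deformed: Sequence[Point],
                     k: int = 4) -> float:
    """Hypothetical rigidity-style loss (illustrative, not the paper's method).

    Mean |d_canonical(i, j) - d_deformed(i, j)| over each center's k nearest
    canonical neighbours. It is zero when local distances are preserved,
    e.g. under a rigid rotation plus translation.
    """
    if len(canonical) != len(deformed):
        raise ValueError("canonical and deformed must have the same length")
    total, count = 0.0, 0
    for i, nbrs in enumerate(knn_indices(canonical, k)):
        for j in nbrs:
            d0 = math.dist(canonical[i], canonical[j])
            d1 = math.dist(deformed[i], deformed[j])
            total += abs(d0 - d1)
            count += 1
    return total / count if count else 0.0


# Toy Gaussian centers in a canonical pose.
centers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
           (0.0, 0.0, 1.0), (1.0, 1.0, 0.0)]
# 90-degree rotation about z plus a translation: a rigid motion.
rigid = [(-y + 2.0, x, z) for x, y, z in centers]
# Stretching along x: a non-rigid deformation.
stretched = [(2.0 * x, y, z) for x, y, z in centers]

print(isometry_penalty(centers, rigid))      # close to zero
print(isometry_penalty(centers, stretched))  # strictly positive
```

In a real training loop such a term would be written with a differentiable tensor library and added to the photometric and prior losses with a weight; the plain-Python version here only shows the quantity being measured.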


Comparisons

[Video comparison panels: Input, BANMo, Ours]

BibTeX

@misc{zhang2024bags,
  title={BAGS: Building Animatable Gaussian Splatting from a Monocular Video with Diffusion Priors},
  author={Tingyang Zhang and Qingzhe Gao and Weiyu Li and Libin Liu and Baoquan Chen},
  year={2024},
  eprint={2403.11427},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}